AI-Powered Code & Developer Agent

A Gen AI agent for code explanation, bug fixing, and code optimization

Project Overview

This project brings generative AI to the developer’s desktop through a local, offline code assistant that explains, fixes, and optimizes Python code. Built with LangChain, GPT4All, and the Mistral-7B model, the agent offers ChatGPT-style assistance while keeping all code on the local machine. It features mode-specific prompts, file upload, downloadable results, and memory-based conversation for tutoring or coding help, all without internet access or API keys.

Objective & Business Context

Modern developers juggle multiple tasks — writing code, debugging errors, optimizing for performance, and often teaching or onboarding others. These activities frequently require switching between tools, documentation, search engines, and forums. This project aims to eliminate that fragmentation.

The AI Code & Developer Agent is designed to:

Provide real-time explanations of Python code

Automatically detect and fix syntax or logic errors

Refactor inefficient code into cleaner, optimized versions

Support general-purpose AI conversations around programming concepts

Offer a local, cost-free alternative to online LLMs like ChatGPT

Why it Matters:

Enhances productivity by reducing cognitive load and tab-hopping

Offers learning support for students and early-career engineers

Enables secure code assistance in offline or enterprise-restricted environments

Makes AI support accessible without depending on third-party APIs

Business Value and Real-World Scope

This AI-powered code agent delivers tangible value for several user groups by improving productivity, speeding up learning, and raising code quality.

Developers can gain deeper understanding of legacy code, streamline review processes, and automate repetitive debugging tasks.

Students and early-career professionals benefit from interactive explanations that bridge the gap between textbook learning and real-world coding challenges.

In privacy-sensitive environments such as enterprise software or internal tool development, this solution allows AI-powered support without exposing code to the internet.

Educators and trainers can use the assistant to walk through code logic, demonstrate best practices, and show before/after versions of fixed or optimized scripts.

Freelancers and QA testers can use the tool for quick, local debugging before client delivery, reducing turnaround time and context-switching.

By running fully offline and requiring no third-party services, the tool supports secure, consistent productivity across industries and use cases.

Implementation Flow

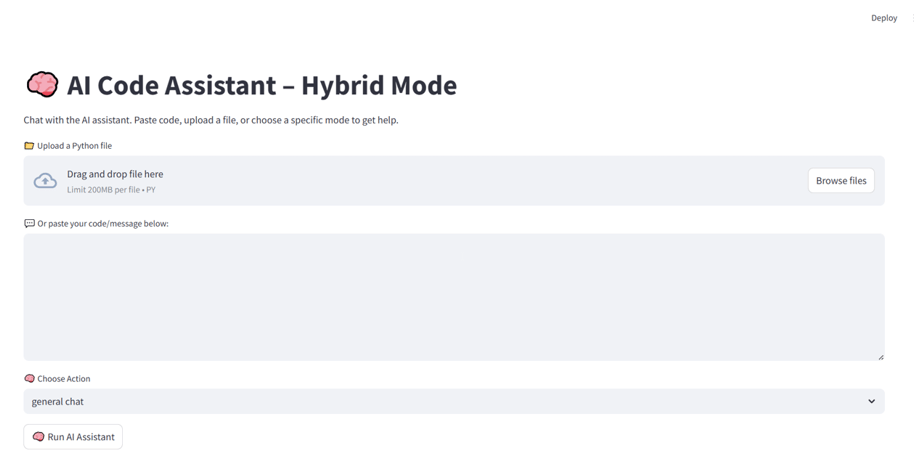

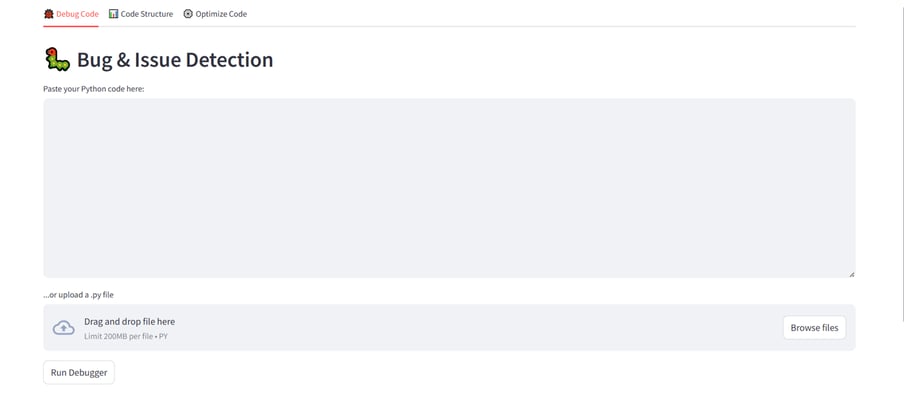

Dataset & Input Handling

The assistant accepts Python code in two forms:

Code pasted into a text field.

Python '.py' files uploaded through the Streamlit interface.

These options allow both lightweight use (e.g., students pasting functions) and file-based workflows (e.g., reviewing full scripts).

Data Preprocessing Workflow

Once code is submitted, this backend flow is triggered:

Input Validation: Checks if the file/text resembles valid Python.

File I/O: Uploaded files are copied to a secure temp directory.

Mode Resolution: The mode is determined by the selected tab (Explain, Fix, Optimize, Chat).

Prompt Preparation: The corresponding prompt template is fetched and filled with the submitted code.

Model Execution: LangChain sends the prompt to the Mistral model through the GPT4All backend.

Post-Processing: Output is formatted and displayed with download/export support.
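The backend flow above could be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the function names (validate_input, save_upload, run_pipeline), the placeholder prompt, and the use of ast.parse as the "resembles valid Python" check are all assumptions. Code that fails to parse is still accepted, because Fix mode exists for exactly that case.

```python
import ast
import os
import tempfile

MODES = ("Explain", "Fix", "Optimize", "Chat")


def validate_input(source: str) -> dict:
    """Reject empty or binary-looking input and record whether it parses."""
    if not source.strip():
        raise ValueError("No code provided")
    if "\x00" in source:
        raise ValueError("Input looks like binary data, not Python source")
    try:
        ast.parse(source)
        parses = True
    except SyntaxError:
        parses = False  # still accepted so that Fix mode can repair it
    return {"parses": parses, "lines": len(source.splitlines())}


def save_upload(data: bytes, suffix: str = ".py") -> str:
    """Copy uploaded bytes into a private temp file and return its path."""
    fd, path = tempfile.mkstemp(suffix=suffix)  # readable only by this user
    with os.fdopen(fd, "wb") as handle:
        handle.write(data)
    return path


def run_pipeline(source: str, mode: str, generate) -> dict:
    """Validate, resolve the mode, build a prompt, call the model, tidy up.

    `generate` is any callable that maps a prompt string to a response
    string; in the real app this would be the local Mistral model.
    """
    if mode not in MODES:
        raise ValueError(f"Unknown mode: {mode!r}")
    report = validate_input(source)
    prompt = f"Mode: {mode}\n\nCode:\n{source.strip()}\n"  # placeholder template
    output = generate(prompt).strip()
    return {"mode": mode, "parses": report["parses"], "output": output}
```

Passing the model in as a plain callable keeps the flow testable without loading a multi-gigabyte model file.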

Prompt Design & LLM Inference

Each use case is powered by a custom prompt template designed to elicit accurate, clear, and actionable outputs:

Explain Mode: Prompts the model to break down the code block line-by-line.

Fix Mode: Asks the model to identify bugs and rewrite corrected code.

Optimize Mode: Requests a more efficient or Pythonic rewrite with justifications.

Chat Mode: Enables open-ended conversation, memory retention, and back-and-forth learning.

Model Info:

Architecture: Mistral-7B Instruct (quantized, GGUF format)

Loader: GPT4All local runtime (llama backend)

Session Type: Stateless for single-shot prompts; memory-buffered for chat mode
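A mode-specific template lookup and a local model call might look like the sketch below. The template wording, the helper names (build_prompt, load_local_model, ask), and the choice to call the gpt4all Python package directly are illustrative assumptions; the project itself routes prompts through LangChain, whose prompt-template classes would play the same role as the plain string templates used here.

```python
PROMPT_TEMPLATES = {
    "Explain": (
        "You are a Python tutor. Explain the following code line by line.\n\n"
        "Code:\n{code}\n\nExplanation:"
    ),
    "Fix": (
        "You are a Python debugger. Identify the bugs in the following code "
        "and rewrite it correctly.\n\nCode:\n{code}\n\nFixed code:"
    ),
    "Optimize": (
        "You are a Python reviewer. Rewrite the following code to be more "
        "efficient or Pythonic and justify each change.\n\n"
        "Code:\n{code}\n\nOptimized code:"
    ),
}


def build_prompt(mode: str, code: str) -> str:
    """Fill the template for `mode` with the submitted code."""
    try:
        template = PROMPT_TEMPLATES[mode]
    except KeyError:
        raise ValueError(f"Unknown mode: {mode!r}") from None
    # str.format only interprets braces in the template, so braces inside
    # the submitted code are passed through untouched.
    return template.format(code=code.strip())


def load_local_model(model_file: str):
    """Load a GGUF model from disk via the gpt4all package (lazy import)."""
    from gpt4all import GPT4All  # requires `pip install gpt4all`

    # allow_download=False keeps the app offline: the file must already exist.
    return GPT4All(model_file, allow_download=False)


def ask(model, mode: str, code: str, max_tokens: int = 512) -> str:
    """Single-shot, stateless call: one prompt in, one completion out."""
    return model.generate(build_prompt(mode, code), max_tokens=max_tokens)
```

Here model_file stands for the file name of the quantized Mistral-7B Instruct GGUF on the local disk. Each call to ask is independent, which matches the stateless session type used for Explain, Fix, and Optimize.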

Output Presentation & Download Handling

Output varies by use case:

Results are instantly visible in the web app.

A download button allows saving results in .txt or .md format.

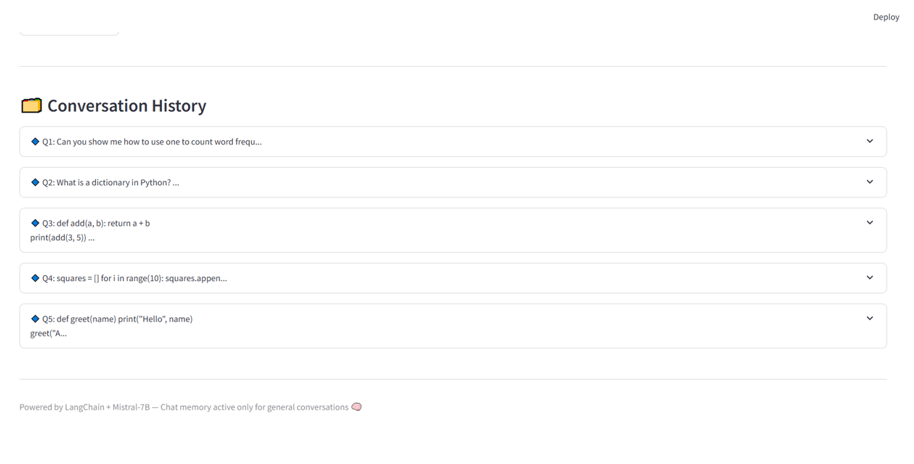

In chat mode, an optional session thread showing prior conversation history is planned (coming soon).

For each tab, outputs are decorated using code-friendly markdown formatting.
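The formatting and export step could be sketched as below. The helper names (format_result, download_payload, render), the heading layout, and the file name result.md are assumptions made for illustration; st.markdown and st.download_button are standard Streamlit calls.

```python
FENCE = "`" * 3  # markdown code fence, built here to avoid nesting fences


def format_result(mode: str, text: str, as_code: bool) -> str:
    """Wrap model output in markdown, fencing it when it is code."""
    body = text.strip()
    if as_code:
        body = f"{FENCE}python\n{body}\n{FENCE}"
    return f"## {mode} result\n\n{body}\n"


def download_payload(markdown: str, fmt: str = "md"):
    """Return (file_name, bytes, mime_type) for a .md or .txt export."""
    if fmt not in ("md", "txt"):
        raise ValueError("fmt must be 'md' or 'txt'")
    mime = "text/markdown" if fmt == "md" else "text/plain"
    return f"result.{fmt}", markdown.encode("utf-8"), mime


def render(mode: str, text: str, as_code: bool) -> None:
    """Show the result in the app and offer it as a download."""
    import streamlit as st  # lazy import so the helpers work without Streamlit

    markdown = format_result(mode, text, as_code)
    st.markdown(markdown)
    name, data, mime = download_payload(markdown)
    st.download_button("Download result", data=data, file_name=name, mime=mime)
```

Explain output would typically be rendered as prose (as_code=False), while Fix and Optimize output would be fenced as Python.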

User Interface Overview

The interface is built using Streamlit for rapid local deployment. Key features include:

Tabbed Navigation: Users choose between Explain, Fix, Optimize, or Chat.

Input Modes: Paste box or file upload widget for each tab.

Response Preview: Clean block rendering for code and textual output.

Download Support: One-click export of analysis or rewritten code.

Designed for both first-time learners and advanced developers, the interface remains clean, modern, and device-compatible.
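A tabbed layout of this kind can be put together with a few Streamlit calls. The sketch below is an assumption about how such an interface might be wired, not the contents of the project's app files; resolve_code, main, and the run_mode callback are hypothetical names.

```python
from typing import Callable, Optional

TABS = ["Explain", "Fix", "Optimize", "Chat"]


def resolve_code(pasted: str, uploaded: Optional[bytes]) -> str:
    """Prefer an uploaded file over pasted text; decode uploads as UTF-8."""
    if uploaded:
        return uploaded.decode("utf-8", errors="replace")
    return pasted


def main(run_mode: Callable[[str, str], str]) -> None:
    """Render one tab per mode; `run_mode(mode, code)` returns markdown."""
    import streamlit as st  # lazy import; start the app with `streamlit run`

    st.title("AI Code & Developer Agent")
    for name, tab in zip(TABS, st.tabs(TABS)):
        with tab:
            # Widget keys must be unique across tabs.
            pasted = st.text_area("Paste Python code", key=f"{name}-text")
            upload = st.file_uploader(
                "Or upload a .py file", type=["py"], key=f"{name}-file"
            )
            if st.button(f"Run {name}", key=f"{name}-run"):
                data = upload.getvalue() if upload is not None else None
                st.markdown(run_mode(name, resolve_code(pasted, data)))
```

Keeping resolve_code separate from the widgets means the input logic can be tested without starting a Streamlit session.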

App Files & Dual Application Modes

The solution consists of two distinct Streamlit apps:

app.py: A non-agentic interface built for Explain/Fix/Optimize modes, useful for debugging and refactoring.

ai_code_assistant_app.py: A fully agentic Streamlit app built on LangChain memory, enabling multi-turn conversations and follow-up Q&A using the same local model.

Both apps use the same backend model and prompt templates, and can be extended independently or merged into a single interface as needed.
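The difference between the two apps comes down to whether earlier turns are replayed to the model. A minimal, library-free sketch of a memory buffer is shown below; the ChatBuffer class and its prompt layout are assumptions for illustration, standing in for the LangChain memory component the chat app is built on.

```python
from collections import deque


class ChatBuffer:
    """Keep the last `max_turns` exchanges and prepend them to each prompt."""

    def __init__(self, max_turns: int = 5):
        # A bounded deque drops the oldest turn automatically, which keeps
        # the prompt within the model's context window.
        self.turns = deque(maxlen=max_turns)

    def build_prompt(self, question: str) -> str:
        history = "\n".join(
            f"User: {q}\nAssistant: {a}" for q, a in self.turns
        )
        prefix = history + "\n" if history else ""
        return f"{prefix}User: {question}\nAssistant:"

    def ask(self, question: str, generate) -> str:
        """Send the question with history, then remember the exchange."""
        answer = generate(self.build_prompt(question)).strip()
        self.turns.append((question, answer))
        return answer
```

A stateless app such as app.py would skip the buffer and send each prompt on its own, while the chat app would route every question through something like ChatBuffer.ask so that follow-up questions can refer to earlier answers.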

Mode-Specific Examples

From app.py

Explain

Input : def add(a, b): return a + b

Output : “This function adds two input arguments a and b and returns the result.”

Fix

Input : def greet(name) print("Hi")

Output : def greet(name): print("Hi")

Optimize

Input :
result = []
for i in range(10): result.append(i*i)

Output : result = [i*i for i in range(10)]

General Chat

Q: What’s the difference between a list and a set in Python?

A: Lists allow duplicates and maintain order; sets are unordered and don’t allow duplicates.
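Model output for the Fix and Optimize modes can be checked mechanically before it is trusted. The sketch below applies such a check to the two examples in this section; the helper parses and the two wrapper functions are hypothetical names, and this kind of verification is not described as part of the project itself.

```python
import ast


def parses(source: str) -> bool:
    """Return True if the source is syntactically valid Python."""
    try:
        ast.parse(source)
        return True
    except SyntaxError:
        return False


broken = 'def greet(name) print("Hi")'   # Fix mode input (missing colon)
fixed = 'def greet(name): print("Hi")'   # Fix mode output


def loop_version():
    """Optimize mode input: build the list with an explicit loop."""
    result = []
    for i in range(10):
        result.append(i * i)
    return result


def comprehension_version():
    """Optimize mode output: the same list as a comprehension."""
    return [i * i for i in range(10)]


print(parses(broken), parses(fixed))                 # False True
print(loop_version() == comprehension_version())     # True
```

A syntax check confirms that a fix at least parses, and comparing outputs on sample inputs confirms that an optimization preserved behavior for those inputs.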

Tools and Libraries Used

Python - Core scripting and file I/O

Streamlit - Interactive UI and local hosting

LangChain - Prompt management, LLM chaining, memory buffers

GPT4All - GGUF model loader for Mistral

Mistral-7B - Core LLM for code generation and analysis

OS / Tempfile - File management and secure handling

IPython.display - Enhanced rendering and notebook compatibility

Pandas (for future versions) - Tabular logs or dataset integration

PyPDF2 / python-docx (planned) - Multiformat upload support

Possible Next Steps & Conclusion

Support for JavaScript, Java, and C++ code.

Export as Jupyter notebooks or .ipynb explanations.

Embed real-time syntax error highlighters.

Session memory for Explain/Fix/Optimize tabs too.

Upload multi-file projects (zip-based parsing).

Compare output from two different local LLMs.

Multi-tab chat memory with timestamped sessions.

Code search and summarization from within a project folder.

Voice input integration for hands-free coding help.

Conclusion

This project showcases the power of locally hosted AI tools in solving real-world coding challenges. With the rise of open-source LLMs and low-resource runtime environments, developers no longer need to rely on costly APIs or compromise their privacy.

The AI Code & Developer Agent provides:

End-to-end local operation

Domain-aligned reasoning (Explain/Fix/Optimize)

Educational impact through real-time analysis

Enterprise readiness for compliance-sensitive industries

Whether you're a curious student, a full-time engineer, or an AI enthusiast — this tool gives you a foundation to code smarter, debug faster, and learn continuously.

The assistant isn’t just reactive — it’s strategic.

Your code, your agent, your control.

Key Concepts

Dive into the foundational concepts, algorithms, and real-world relevance behind this project. From machine learning principles to business strategy insights, this conceptual study bridges the gap between technical implementation and applied decision-making, helping you understand not just how it works, but why it matters.

GitHub Repository

Want to dive deeper into how this project actually works?

We’ve made the complete codebase and resources available for you on GitHub.

👉 Access the full repository here:

Whether you're a learner, recruiter, or collaborator — there's something for everyone.
